At its best, science is a self-correcting march toward greater knowledge for the betterment of humanity. At its worst, it is a self-interested pursuit of greater prestige at the cost of truth and rigor — Ed Yong

Scientific progress appears to be slowing, and scientific results seem to be less disruptive than in the past. A popular explanation for this trend is that young scientists (or newcomers to a field) must master an ever-growing body of knowledge before they are capable of generating their own new ideas. This ‘burden of knowledge’ theory views scientific fields as akin to oceans. Every day, as new discoveries are made, more and more knowledge is poured into the ocean, permanently raising the sea level. A scientific neophyte starts at the seabed and must swim their way upwards to reach the knowledge surface, whereupon they can finally contribute to advancing their field.

But this model of science as an ever-growing mass of facts is not how things really work. Scientific knowledge is more like a garden continually tended by a community of practitioners. Old facts and models are weeded out because they outlive their usefulness, or they are simply forgotten, lost in the overgrowth. A scientist doesn’t need to know everything about a field before they can contribute; it’s possible to tend the petunias without knowing about the lilacs on the other side of the garden.

Yet something about science does seem to be getting harder with time. One possibility is that we’ve picked all the low-hanging fruit, and each new discovery requires a steadily growing investment of time and resources. But what if the burden is not technological, but social? Perhaps it’s not knowledge that scholars must accumulate in ever-increasing quantities, but prestige.

At least, that’s the hypothesis I came away with after reading Mark Khurana’s book “The Trajectory of Discovery”[1]. Khurana, a researcher at the University of Copenhagen, was motivated to write his book after a frustrating encounter with the biomedical research enterprise. He recounts the inciting incident:

> With a completely fresh set of eyes, research initially seemed to be a pure pursuit of truth. Over time, however, this sentiment slowly morphed from admiration to frustration, not toward individual researchers, but rather toward the system more broadly. It seemed to me that there was a vast amount of scientific potential that was going to waste, simply because the incentive structures underlying the academic community seemed to reward the wrong things.

“The Trajectory of Discovery” is an attempt to catalogue the distinct factors that slow down, or speed up, scientific discovery in the biomedical sciences. For example, a chapter on “Citations as Currency” covers how using citations as a measure of scientific value can incentivise scientists to pursue projects on the basis of expected popularity, not necessarily scientific value. All in all, Khurana covers 23 different factors including ‘p-hacking’, the streetlight effect, and outright fraud. The value of “The Trajectory of Discovery” is primarily as a reference work, and as a collection of (interesting) facts — the creation of a unified theory on what is slowing biomedical progress is left as an exercise to the reader.

So, here’s my take: I suspect that many of the individual challenges that Khurana mentions ultimately stem from an over-reliance on prestige as an axis of competition for limited resources.

Science is a social enterprise. In biomedical science, unlike, say, maths[2], results cannot practically be proven to be true in any definitive sense. What is thought to be true is then a distillation of group consensus among a relevant social collective of scientists.

Before you can ‘do science’ and be accepted into the community, you must first signal potential to be a valuable contributor. In theory, anyone can experiment in their backyard and publish the results. In practice, the citizen scientist is a relic of a bygone age, replaced by the professional scientist class. The social collective decides who to let into the garden, so to speak.

So how does one get recognised by their peers? Ideally, the quality of a scientist could be evaluated by looking at their track record. But unfortunately, science is not like sprinting: the skill of science is hard, if not impossible, to quickly and objectively measure. The problem for new scientists is that scientific skill is mostly judged retrospectively; it takes decades to judge the quality of a scientific career and a body of work. Nor can we simply give everyone free rein and judge them later. Modern biomedical science is expensive, complicated, and time-consuming; limited funding resources must be allocated.

What is needed is a valid predictor: a way to distinguish good from bad scientists at the outset. This is essentially what prestige is, a signal of quality; prestige is a sort of currency or capital used to compete for limited resources. The artefacts of prestige include degrees from highly ranked universities, Nature/Cell/Science publications, NIH[3] grants, and highly cited papers. Khurana refers to this as "scientific capital": the collection of research experiences, publications, and relationships accumulated over a scientific career. The point of prestige, from the perspective of a funder, is to serve as a predictive signal of valuable future research output[4].

If we think of a graph with two axes, scientific results on one and prestige on another, we can plot the main dimensions along which scientists compete. This dual-axis framing isn’t new, as Khurana notes:

> Already during the 1970s, the French philosopher Pierre Bourdieu had considered how different actors in the academic world interact with each other. He believed that actors in academia, referred to as Homo Academicus, fought for legitimacy on two fronts. On the first front, referred to as the autonomous pole, academics fight for intellectual and scientific capital. On the second front, referred to as the heteronomous pole, academics fight for economic and political capital.

If prestige were a good predictor of the ability to do important science, we’d see a strong correlation between performance on both poles. Early prestige accumulation should predict future results, and we do see this in the data (although there’s likely to be a ‘rich get richer’ effect at play). At its best, prestige is a form of evaluation via the wisdom of the crowds. But as anyone who has been involved in science knows, prestige does not always accumulate where it should.

Invoking Goodhart’s law, there is a big potential problem with prestige as a predictor: if the returns to activities that generate prestige are greater than the returns to activities that generate scientific breakthroughs, scientists are incentivised to optimise for prestige instead. If a system becomes good at conferring and measuring prestige, it will disproportionately attract status-seekers who value prestige over the outcomes we’d prefer them to value (like scientific truth-seeking). Critically, status-seekers are unlikely to think they’re doing anything wrong; they are simply responding to incentives. It is not unreasonable to believe that work is valuable because it gets rewarded. As a result, incentives shape the values of people participating in the system.

Status-seeking behaviour is tolerable if there are enough resources to go around to keep everyone occupied; the status-seekers may take more than their fair share, but at least everyone else can eat. Given enough time, the best scientists would rise to the top anyway.

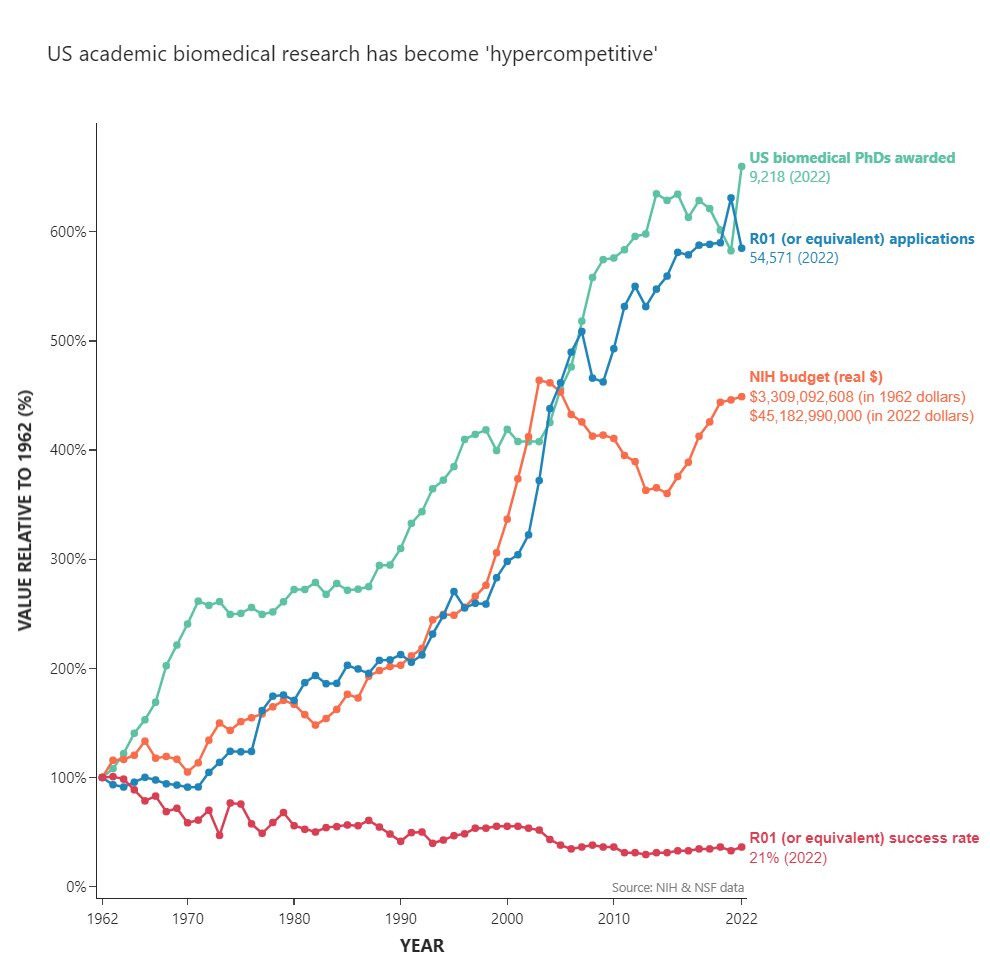

The problem with this, at least in biomedical fields, is that scientists are increasingly in competition for scarce resources. Khurana notes the severe and growing mismatch between the number of scientists trained and the supply of full-time positions. Funding has not kept pace with the number of graduate scientists; the NIH’s budget has stagnated in real terms, following a funding boom and subsequent bust through the late 1990s and early 2000s.

As a consequence of this mismatch in the supply and demand for funding, modern academic biomedical research has become “hypercompetitive”. Khurana mentions several issues downstream of this increased competitiveness: a culture of ‘publish or perish’ leading to a proliferation of incremental or low-quality papers, exploitation of the graduate student workforce, burnout, and high attrition.

Because prestige is an input into funding decisions, young researchers are being choked out by a system that has little room left to accommodate them. Science is ageing, driven by lengthening training periods and an exodus of young talent. The average age of scientists who receive their first significant NIH grant (an R01) is now in the 40s. In Khurana’s words:

> With researchers spending ever more time writing grant applications, resources being concentrated within too narrow a band of individuals, and too few positions available for the supply of trained researchers, there is ‘a growing gulf between the aspirations of biomedical researchers, and the career prospects that are open to them.’

With no track record, early career scientists must start off by accumulating prestige from their degrees, who they work with, and what they publish. They can then ‘cash in’ this prestige for the resources required to do scientific work (grant funding, lab space, support staff, etc.). In the past, the ‘prestige price’ to enter into the scientific community was relatively low. The system breaks when the bar to entry — the amount of prestige that must be accumulated to be allowed to do work — rises to the point that new scientists must spend more time on prestige accumulation than anything else. This results in a pipeline where prestige is used to accumulate more prestige, and the true purpose of science is lost in the search for ever-greater prestige.

A core problem with incentivising scientists to value prestige is that it can be accumulated by validating the status quo, since prestige is awarded by the now-eminent scientists who established that status quo in the first place. At its worst, this dynamic can incentivise scientists to stifle competing ideas and even commit fraud — a prominent example of this being the “cabal” of scientists who stymied research into non-amyloid-beta causes of Alzheimer’s for decades.

A less overtly harmful, but perhaps more insidious, effect of overvaluing prestige is missing out on valuable novel ideas. Because genuinely novel ideas are harder to judge in the social validation system of science, they are inherently low status (e.g. Katalin Karikó as an ‘anti-prestige’ role model). Young researchers in particular are a source of novel ideas, and their exodus ossifies science.

Khurana dedicates several chapters to this idea that novel ideas are discounted by the scientific establishment. As evidence he cites the tendency for interdisciplinary work to be published in lower-impact journals, and the “pivot penalty” experienced by researchers who shift focus areas. However, it is in the grant-making process where the novelty discount is most impactful. Major funders, including the NIH, are notoriously conservative, and often prefer to fund higher-status work with more predictable outcomes. Funder risk-aversion is plausibly linked to resource availability: by one measure of novelty, the NIH reduced funding for novel ideas when its budget was cut in real terms (around the year 2000).

Graph from M. Packalen and J. Bhattacharya

The novelty discount is just one example of how the funding system is at the heart of the issues caused by an over-reliance on prestige-based evaluations. In Khurana’s words:

> The problem with the structure of grant funding processes and the subsequent creation of different classes of researchers is that we are lulled into believing that the system is purely meritocratic when it in fact is not. We have chosen a narrow set of criteria to define previous successes, and by extension to define merit. But these criteria inevitably lead to a stratified research environment where future research ideas are funneled through a sieve of preexisting eminence, meaning that the imbalance in the grant process skews toward prior prestige more so than the quality of the idea.

Of course, it’s easy to say there are problems with using prestige to help allocate funding, but what’s the alternative? The answer isn’t trying to stamp out prestige-based evaluation in science: even if we could eliminate prestige, whatever we replaced it with would become the new prestige.

Personally, I’m partial to solutions that increase the diversity of funding sources for scientific research. The biomedical research funding ecosystem is dominated by a small number of risk-averse mega-grantmakers like the NIH. This concentration arguably gives funders too much power, which is part of the reason why researchers need to spend half of their time writing grants instead of getting on with research.

Compare this to the start-up funding ecosystem, which is a much more distributed market; if a founder strikes out with a particular angel or venture capitalist, there are plenty more who might be receptive to the founder’s vision. Because venture capitalists need to compete for access to the best deals, they are also plausibly much more accommodating to entrepreneurs than scientific funding institutions are to the scientists they support.

The benefit of encouraging greater diversity in biomedical research funding sources — whether by founding new focused research organisations (FROs), or breaking up the NIH — is that it engenders a diversity of funding criteria. Because different organisations are likely to value different things when making funding decisions, it’s harder to game the system. The more diverse the decision makers, the more diverse the portfolio of funded research — and hopefully, the lower the burden of prestige accumulation.

Thanks to Jake Parker for giving feedback on a draft of this piece

[1] The full title is “The Trajectory of Discovery: What Determines the Rate and Direction of Medical Progress?”

[2] Yes, I’m aware of Gödel's incompleteness theorems.

[3] The US National Institutes of Health. I mostly talk about the US in this piece because the NIH is by far the most significant funder of academic biomedical research globally.

[4] Although Robin Hanson has argued that funders are not necessarily buying outcomes, but prestige and status by association.

Great article Alex! Do you think there's an argument to make for even larger groups and common research directions in biomedical research to counter the burden of prestige? The idea would be that you don't have to fight for the limited funding/prestige to start your own lab and can instead join a larger organization.

I ask because from my experience in theoretical physics and computer science, it's much cheaper to fund a bunch of people trying very different things. As you write in your essay though, biomedical research is expensive. It seems like having a bunch of small labs doing different (maybe overlapping) things would be ideal, but isn't once you factor in funding. With consolidated groups, you would relieve some of the big costs in running experiments and getting the appropriate equipment, right?

I like the employment of metaphors. It's a different style from most scientific writing, and it offers a fresh perspective.